Analyze a dataset in memory

Here, we’ll analyze the growing dataset by loading it into memory.

This is only possible if the dataset is not too large. If you work with particularly large data, please read the guide on iterating over data batches (to come).

import lamindb as ln

import lnschema_bionty as lb

import anndata as ad

💡 loaded instance: testuser1/test-scrna (lamindb 0.55.0)

ln.track()

💡 notebook imports: anndata==0.9.2 lamindb==0.55.0 lnschema_bionty==0.31.2 scanpy==1.9.5

💡 Transform(id='mfWKm8OtAzp8z8', name='Analyze a dataset in memory', short_name='scrna4', version='0', type=notebook, updated_at=2023-10-04 16:40:56, created_by_id='DzTjkKse')

💡 Run(id='RkXUcxbGTRy8Pu1QrH1l', run_at=2023-10-04 16:40:56, transform_id='mfWKm8OtAzp8z8', created_by_id='DzTjkKse')

ln.Dataset.filter().df()

| id | name | description | version | hash | reference | reference_type | transform_id | run_id | file_id | storage_id | initial_version_id | updated_at | created_by_id |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| D1s4vH9rGh45eJ13uQiV | My versioned scRNA-seq dataset | None | 1 | WEFcMZxJNmMiUOFrcSTaig | None | None | Nv48yAceNSh8z8 | zyBkh49M3qcVFVbvsvZ5 | D1s4vH9rGh45eJ13uQiV | None | None | 2023-10-04 16:39:54 | DzTjkKse |
| D1s4vH9rGh45eJ13uQVj | My versioned scRNA-seq dataset | None | 2 | us9ZADbZkmjSnRqVFu2W | None | None | ManDYgmftZ8Cz8 | 5BdVWAv5slv0iNhEPo6T | None | None | D1s4vH9rGh45eJ13uQiV | 2023-10-04 16:40:38 | DzTjkKse |

dataset = ln.Dataset.filter(name="My versioned scRNA-seq dataset", version="2").one()

dataset.files.df()

| id | storage_id | key | suffix | accessor | description | version | size | hash | hash_type | transform_id | run_id | initial_version_id | updated_at | created_by_id |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| D1s4vH9rGh45eJ13uQiV | 62dM2FBg | None | .h5ad | AnnData | Conde22 | None | 28049505 | WEFcMZxJNmMiUOFrcSTaig | md5 | Nv48yAceNSh8z8 | zyBkh49M3qcVFVbvsvZ5 | None | 2023-10-04 16:39:54 | DzTjkKse |
| UKDwd6U73Y9ZbFkPS4IC | 62dM2FBg | None | .h5ad | AnnData | 10x reference adata | None | 660792 | GU-hbSJqGkENOxVKFLmvbA | md5 | ManDYgmftZ8Cz8 | 5BdVWAv5slv0iNhEPo6T | None | 2023-10-04 16:40:30 | DzTjkKse |
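Whether a dataset is small enough to load can be estimated roughly from the size column above. The following is a minimal sketch, assuming the sizes are in bytes; `fits_in_budget` and the 1 GiB budget are hypothetical, chosen for illustration, and not part of lamindb. On-disk size is only a rough proxy for the memory the loaded object will need.

```python
# Hypothetical helper, not part of lamindb: compare the combined on-disk
# size of a dataset's files against a memory budget.
def fits_in_budget(file_sizes_bytes, budget_bytes):
    """Return True if the summed file sizes do not exceed the budget."""
    return sum(file_sizes_bytes) <= budget_bytes


# Sizes copied from the `size` column of the table above (assumed bytes).
file_sizes = [28049505, 660792]
budget = 1024**3  # assumed budget of 1 GiB, chosen for illustration

total_mib = sum(file_sizes) / 1024**2
print(f"total size: {total_mib:.1f} MiB, fits: {fits_in_budget(file_sizes, budget)}")
```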

If the dataset doesn’t consist of too many files, we can now load it into memory.

Under the hood, the AnnData objects are concatenated during loading.

How long this takes depends on several factors, for instance the number and size of the files.

If you load the dataset often, consider storing a concatenated version of it rather than the individual pieces.

adata = dataset.load()

The default is an outer join during concatenation as in pandas:

adata

AnnData object with n_obs × n_vars = 1718 × 36508

obs: 'donor', 'tissue', 'cell_type', 'assay', 'n_genes', 'percent_mito', 'louvain', 'file_id'

obsm: 'X_umap', 'X_pca'
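To illustrate what an outer join means here, the sketch below concatenates two toy tables with pandas. The cell and gene names are invented, and this is not the code path that dataset.load() uses; it only shows the join semantics.

```python
import pandas as pd

# Two toy expression tables (cells x genes) with partially overlapping genes.
# All names and values are invented for illustration.
a = pd.DataFrame({"geneA": [1.0, 2.0], "geneB": [3.0, 4.0]}, index=["cell1", "cell2"])
b = pd.DataFrame({"geneB": [5.0], "geneC": [6.0]}, index=["cell3"])

# Outer join: keep the union of columns, filling missing entries with NaN.
outer = pd.concat([a, b], join="outer")

# Inner join: keep only the columns shared by all inputs.
inner = pd.concat([a, b], join="inner")

print(outer.columns.tolist())
print(inner.columns.tolist())
```

With an outer join, the concatenated table contains every variable that is present in at least one input, so its number of variables can exceed that of any individual input.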

The AnnData object references the individual files in its .obs annotations:

adata.obs.file_id.cat.categories

Index(['D1s4vH9rGh45eJ13uQiV', 'UKDwd6U73Y9ZbFkPS4IC'], dtype='object')
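The file_id column makes it possible to trace each observation back to its source file. The sketch below uses a small pandas table as a stand-in for adata.obs; the cell types and row counts are invented, and only the two file IDs are taken from the output above.

```python
import pandas as pd

# Toy stand-in for `adata.obs`; the real table has one row per cell.
obs = pd.DataFrame(
    {
        "cell_type": ["T cell", "B cell", "T cell"],
        "file_id": pd.Categorical(
            ["D1s4vH9rGh45eJ13uQiV", "D1s4vH9rGh45eJ13uQiV", "UKDwd6U73Y9ZbFkPS4IC"]
        ),
    }
)

# Number of observations contributed by each file.
cells_per_file = obs["file_id"].value_counts()

# Boolean mask selecting the rows that originate from one file.
from_second_file = obs["file_id"] == "UKDwd6U73Y9ZbFkPS4IC"
subset = obs[from_second_file]

print(cells_per_file)
print(subset)
```

On the real object, a mask of the same form can be used to subset the AnnData itself, for example adata[adata.obs.file_id == "UKDwd6U73Y9ZbFkPS4IC"].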

We can easily obtain Ensembl IDs for gene symbols using the lookup object:

genes = lb.Gene.lookup(field="symbol")

genes.itm2b.ensembl_gene_id

'ENSG00000136156'
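The idea behind such a lookup object can be sketched in a few lines of plain Python. This is not how lamindb implements lookup(); `make_lookup` and `genes_sketch` are hypothetical names, and the single record only mirrors the ITM2B output shown above.

```python
from types import SimpleNamespace

# Toy records; only the ITM2B entry mirrors the output shown above.
records = [
    {"symbol": "ITM2B", "ensembl_gene_id": "ENSG00000136156"},
]


def make_lookup(records, field):
    """Build an attribute-style lookup keyed by the lowercased `field` value."""
    return SimpleNamespace(
        **{rec[field].lower(): SimpleNamespace(**rec) for rec in records}
    )


genes_sketch = make_lookup(records, field="symbol")
print(genes_sketch.itm2b.ensembl_gene_id)
```

Attribute access makes the records discoverable via tab completion. A fuller version would also have to handle values that are not valid Python identifiers, such as symbols containing hyphens.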

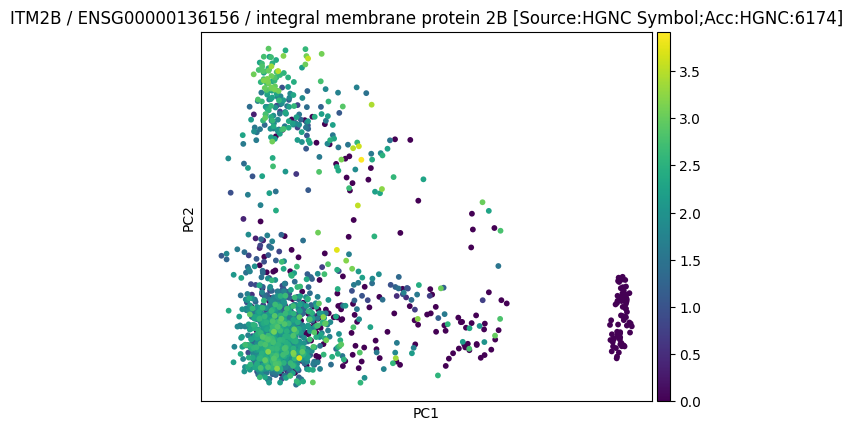

Let us create a plot:

import scanpy as sc

sc.pp.pca(adata, n_comps=2)

2023-10-04 16:41:00,818:INFO - Failed to extract font properties from /usr/share/fonts/truetype/noto/NotoColorEmoji.ttf: In FT2Font: Can not load face (unknown file format; error code 0x2)

2023-10-04 16:41:00,951:INFO - generated new fontManager

sc.pl.pca(
    adata,
    color=genes.itm2b.ensembl_gene_id,
    title=(
        f"{genes.itm2b.symbol} / {genes.itm2b.ensembl_gene_id} /"
        f" {genes.itm2b.description}"
    ),
    save="_itm2b",
)

WARNING: saving figure to file figures/pca_itm2b.pdf

file = ln.File("./figures/pca_itm2b.pdf", description="My result on ITM2B")

file.save()

file.view_flow()

Given the image is part of the notebook, there is no actual need to save it separately. You can instead rely on the report that is created when you save the notebook from the command line:

lamin save <notebook_path>